Deployment

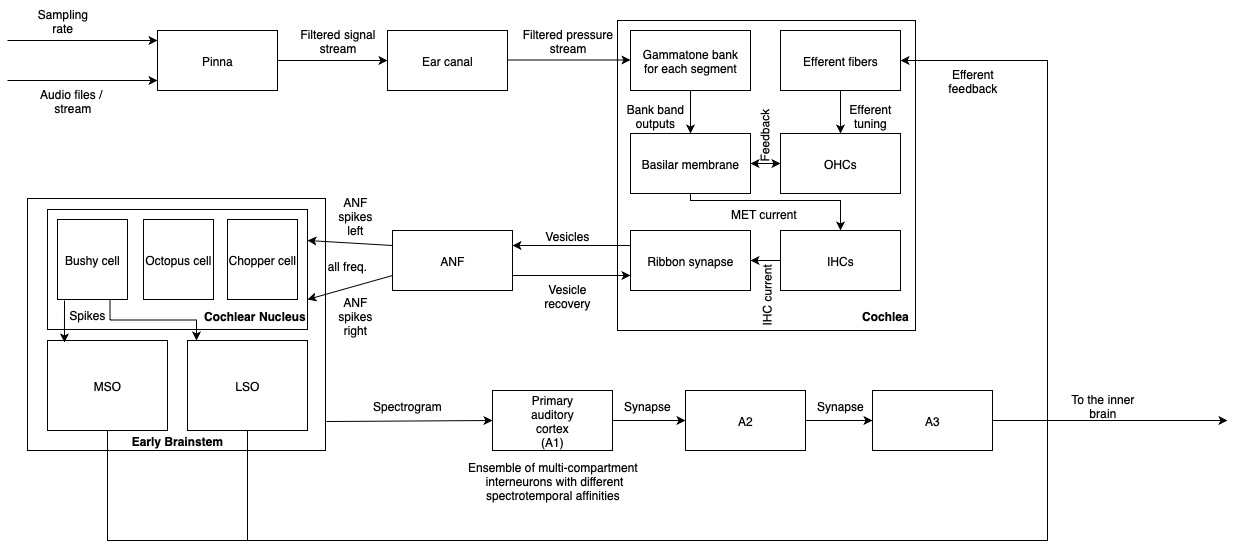

Over the past two weeks, I’ve focused on integrating the cell functionalities developed so far, i.e., fixing mismatched types, aligning data frequencies, evaluating concurrency, etc. Because they were built separately, a cohesive architecture (such as the one below) helps with integration. Since testing dominates the deployment phase, new algorithms are rarely developed, and changes to existing ones are limited to type-mismatch fixes.
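As a rough illustration of the kind of integration fix mentioned above, the sketch below aligns the output of one cell stage with the input expected by the next: it casts samples to a common type and resamples them to a shared rate. The helper name `align_signal`, the example rates, and the choice of linear interpolation are assumptions made for illustration; they are not taken from the project's code.

```python
from typing import List, Sequence


def align_signal(samples: Sequence[float], src_hz: int, dst_hz: int) -> List[float]:
    """Cast samples to float and linearly resample from src_hz to dst_hz.

    Hypothetical helper: the name and the interpolation scheme are
    illustrative assumptions, not the project's actual method.
    """
    if src_hz <= 0 or dst_hz <= 0:
        raise ValueError("sample rates must be positive")

    # Resolve type mismatches (e.g. int vs. float) with one common type.
    values = [float(s) for s in samples]
    if len(values) < 2 or src_hz == dst_hz:
        return values

    # Keep the same duration, but sample it at the destination rate.
    duration = (len(values) - 1) / src_hz
    n_out = int(round(duration * dst_hz)) + 1

    out = []
    for i in range(n_out):
        pos = i * src_hz / dst_hz            # position in source-sample units
        lo = min(int(pos), len(values) - 2)  # left neighbour, clamped at the end
        frac = pos - lo                      # distance from the left neighbour
        out.append(values[lo] * (1 - frac) + values[lo + 1] * frac)
    return out
```

For example, `align_signal([0, 2, 4], 2, 4)` doubles the sampling rate of a three-sample integer signal and returns floats. A real stage boundary would likely need filtering before downsampling, which this sketch leaves out.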

Enhanced Auditory Architecture

Algorithms / Development

CMU Development

Minor tweaks across the audio CMU, cochlea and cortex to streamline cell methods into three distinct groups: cell creation, organization and functioning. Please find the changes in the commits (linked below for reference).

Development Activity - https://github.com/akhil-reddy/beads/graphs/commit-activity

Please note that some code (class templates, function comments, etc.) is AI-generated, so that I can spend more of my productive time thinking and designing. However, I cross-verify each block of generated code against its corresponding design choice before moving ahead.

Next Steps

Deployment

- Overlaying video frames onto the retina, including code optimization for channel processing

- Post-processing in the visual cortex

- Overlaying audio clips onto the cochlea, including optimization for wave segment processing

- Post-processing in the auditory cortex

- Parallelization / streaming of cellular events via Flink or equivalent
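To make the wave segment and parallelization items more concrete, here is a minimal sketch that splits an audio clip into fixed-size segments and processes them concurrently. It uses Python's standard `ThreadPoolExecutor` as a local stand-in for a streaming engine such as Flink. The function names, the window and hop sizes, and the per-segment energy computation are all placeholders assumed for illustration, not the planned cochlea logic.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, List, Sequence


def wave_segments(clip: Sequence[float], size: int, hop: int) -> Iterator[List[float]]:
    """Yield fixed-size windows over the clip, advancing by `hop` samples."""
    if size <= 0 or hop <= 0:
        raise ValueError("size and hop must be positive")
    for start in range(0, len(clip) - size + 1, hop):
        yield list(clip[start:start + size])


def segment_energy(segment: Sequence[float]) -> float:
    """Placeholder per-segment work (assumed): mean squared amplitude."""
    return sum(x * x for x in segment) / len(segment)


def process_clip(clip: Sequence[float], size: int = 4, hop: int = 2,
                 workers: int = 4) -> List[float]:
    """Process segments concurrently; map() returns results in segment order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(segment_energy, wave_segments(clip, size, hop)))


if __name__ == "__main__":
    print(process_clip([1, 1, 1, 1, 2, 2, 2, 2]))
```

Threads keep the example short, but for CPU-bound work in Python a process pool or an actual streaming engine would be the more realistic choice. The useful part of the sketch is the shape of the pipeline: a segment generator feeding an order-preserving parallel map.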

Building the Environmental Response System (ERS)

- Building the ERUs

- Neurotransmitters - Fed by vision’s bipolar and amacrine cells, for example, to act on contrasting and/or temporal stimuli

- Focus - Building focus and its supporting mechanisms (of which acetylcholine is one)